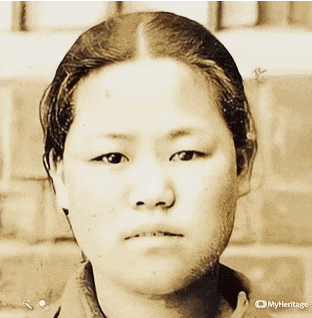

Recently, recreations of great historical figures made with deep fake technology have become a hot topic. On the most recent Samiljeol, Independence Movement Day (March 1), videos of independence activists such as Yoo Gwan-soon and Kim Goo, recreated using deep fake technology, spread across online communities and made a lasting impression on viewers.

▲ Yoo Gwan-soon's deep fake image (Photo from Korea Economy Daily)

So, what is deep fake technology? It is a process that uses a machine learning technique called a ‘Generative Adversarial Network (GAN)’ to create a photo or video that is not real by superimposing images onto an original. When the facial expressions, habits, and voice of a specific target are fed in, the AI learns to generate video of that target that is so realistic it can be nearly impossible to tell real from fake. Creating a deep fake video or photo requires feeding several hundred or even thousands of images into an artificial neural network, a computer system that can recognize patterns in data, which then reconstructs the images into a single face with an expression of our choosing.

Deep fake technology is a double-edged sword. On the bright side, it supports the entertainment industry in the production of movies and dramas, making it possible to produce vivid images without filming them live. In addition, deep fake technology can give form to people we can no longer see in person, such as historical figures and loved ones we miss. For example, in Korea, the faces of Yoo Gwan-soon, Yoon Bong-gil, and Ahn Jung-geun, leaders of Korea's independence movement, were recently brought back to life using the deep fake technology available on the website MyHeritage. MyHeritage is a service for finding, sharing, and preserving family history, available to users as an online, mobile, and software platform. Users can build their own family trees and search for their ancestors among billions of historical records. People reacted to the videos by saying, "Deep fake technology has never looked so brilliant before. I agree with these uses of deep fake technology."

However, the downside of this readily available technology is also real. The number of digital sex crimes committed using deep fake technology has increased. One of the perpetrators of the Telegram Narcotics Rooms Incident showed how the software can be used to synthesize the faces of acquaintances into pornographic material and distribute it. The negative aspects of deep fake technology have become a serious social problem around the world. According to the Dutch cybersecurity research company DeepTrace, 96% of the 14,698 deep fake videos distributed over the last year were obscene materials. Furthermore, fake news made with deep fake technology has caused great confusion internationally. It became a hot topic when a manipulated video of former President Barack Obama displaying a vitriolic attitude toward President Donald Trump was posted on social media. It was later revealed to be a video made by Jordan Peele, a film director who wanted to publicize the potential dangers of deep fake technology. These events reminded people that deep fake technology can be abused for political purposes and is likely to fuel the spread of fake news.

▲ A deep fake video in which footage of former U.S. President Barack Obama was manipulated using a performance by Hollywood filmmaker Jordan Peele, making it appear as if Obama were insulting President Donald Trump. (Photo from Kyunghyang Shinmun)

It is clear that there are two sides to deep fake technology, but what lies ahead for it? ‘Deep Nostalgia’ is a service that uses deep fake technology to bring deceased loved ones back to life by adding movement to their photos. It was created by MyHeritage, which uses AI technology from the Israeli artificial intelligence company D-ID to create the memorial images. The service is available after signing up for a membership on the MyHeritage website. With a membership, users can upload up to five pictures of people they want to see brought back to life for free. For those concerned about personal information, uploaded photos are kept secure and are not shared across the platform, and they are automatically deleted once the service is complete. MyHeritage is fully aware of the potential for abuse, so it not only warns users not to upload pictures of people who are currently alive, but also prevents them from adding voices to the images, to guard against manipulation.

This type of service is intended to give us a vivid image of figures from the past and to offer great comfort to those who have lost people precious to them. While Deep Nostalgia is an example of a good use of deep fake technology, the danger that the technology will be exploited and cause tremendous social confusion is real. Deep fake technology can give someone a vivid representation of a person they miss and help the film and television industry with its productions, but it will also have a huge social impact if it is used to create false images. If we are not concerned about the abuse of deep fakes, we are voluntarily taking the first step toward an era in which we can no longer distinguish between what is true and what is false. That is not a world in which societies can live freely, so active discussion on the proper use and control of deep fake technology must continue. Only then can it genuinely become a useful technology that enhances everyone's lives.

서영진, 김민경, 오유준 dankookherald@gmail.com
